
Our essays reflect only their author's point of view. We ask only that they respect our Principles.

The various ways I have used AI to improve ProleWiki

by CriticalResist

Published: 2025-10-24 (last update: 2025-10-24)

Reading time: 10-20 minutes

AI has become almost ubiquitous in some areas, while still drawing heavy criticism in others. This essay does not set out to argue for one side or the other (that would require a much more comprehensive and exhaustive guide). Instead, as a starting point, here are some of the ways I have personally used AI to improve ProleWiki.

ProleWiki offers all of its content to its readers for free, without expecting anything in return. We work for the reader, and AI has helped us bring that work to people more efficiently, freeing up time that we can put toward other problems. The point of this essay is to offer some ideas and spark creativity around LLM use cases.

These days my choice of LLM is Deepseek cloud.

Understanding MediaWiki structure

The first time I used AI (LLMs specifically) to work on ProleWiki was to help me understand how the MediaWiki software, which ProleWiki runs on, works. It was with AI help that I understood what namespaces are, what

A common argument against AI is that it makes people switch their brains off. I find that this is a choice: read the output critically, double-check the important parts, and you will learn. MediaWiki is notoriously poorly documented, with important information that is hard to find or outright missing. Some explanations from the developers take several passes to understand, and sometimes miss the exact piece of information you need. However, there is a lot of discussion about MediaWiki online, so LLMs have plenty of training material on the software.

The first project I wanted to take on was redoing the library homepage, which used to be just a list. But I wasn't sure what exactly we could build in MediaWiki, or how. I explained my idea to an LLM in detail, and it gave me a step-by-step plan. I realized it wasn't as difficult as I had thought (we were considering hiring a developer for it, despite not having the funds) and I was able to code it myself. I just needed a tutorial, which unfortunately didn't exist on the internet yet.

Today, I am the MediaWiki dev we would otherwise have had to hire. This reduces idle time and lets us respond quickly and efficiently to new bugs, and to build the features we want to bring to our readers.

With this help, I went from a level 1 MediaWiki user to a level 8 or 9, and I learn more every week as new problems come up that we need to solve, as the next sections show.

Creating the new homepage

In late 2024, we introduced a new homepage to ProleWiki, here (and the old one archived here).

I am a designer by trade, so I relied relatively little on the LLM for this project. However, everyone has blind spots, and I am no different. In my case, I find it hard to get a project started, but once the ball gets rolling it becomes much easier. For the new homepage, I followed the design thinking method. I first asked the LLM to explain the method in its own words, then prompted it one step at a time. I needed the most help with step 1, Understanding [your audience].

We had some idea of who our audience was, but LLM help allowed us to be more confident in our hypotheses, unearthed considerations we had not necessarily thought of, and ultimately helped us get the ball rolling on this project.

See, the first question a designer asks is: why do we even want a new homepage? We knew that our archived homepage had served us well but was severely outdated, given how much we had grown over its 4 years of existence. We also had an idea of the audiences that read us (4 in total), but the LLM helped us confirm this, give these cohorts names, and build a more detailed persona for each of them than just "I guess some people like sources idk".

And it's not only about what to add to the new homepage, but also what to remove. What's important and what isn't? Where should the important stuff go, and where shouldn't it? Using the LLM's vision capability (recognizing what an image depicts), we were able to put the new homepage to the test once it was coded and ask the LLM questions about it. We were also able to ask about accessibility practices we were not necessarily familiar with.

Thus an LLM was a tool in the design process to help fill in the gaps and weak spots. In the process, I personally reinforced my understanding of the design thinking method and learned new things.

This new homepage serves our three different audiences equally, which is a delicate balance to strike.

Adding preferences for reader comfort

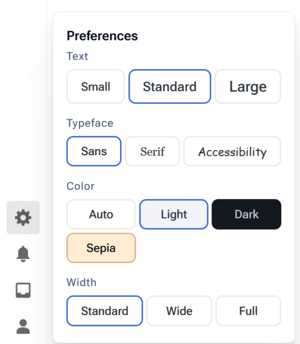

Another big addition, this time made entirely with an LLM, is the set of preferences behind the gear icon in the sidebar.

By default, our theme (or skin, as MediaWiki calls it) adds this gear icon with some basic preferences: text size, page width, and light/dark color schemes.

I wanted to expand on this, because my philosophy when it comes to developing for ProleWiki is that every reader should be able to customize the experience for themselves. Websites should cater to the user and not the other way around.

With LLM help, we added new preferences that are easy to edit: a typeface selector (currently sans-serif, serif, and accessibility fonts) and more color schemes, including a sepia mode that sits between light and dark, plus an LGBT/trans theme offered every June for Pride Month.

In the backend, this is all done in JavaScript. We have an array that lets us add custom themes and fonts, and the CSS file then declares what each theme or font looks like. With this system, we can easily create more themes whenever we want and make them available for selection. This wasn't possible natively with our MediaWiki theme, so we coded it in to fit our needs.

To achieve this with an LLM, I simply gave Deepseek the HTML structure of the preferences panel and explained what I wanted. I made sure to mention that I wanted to be able to easily create new themes myself, and it came up with the array structure.
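The panel code itself isn't reproduced in this essay, so the following is only a minimal TypeScript sketch of an array-driven setup like the one described above. The option ids and the `pw-<kind>-<id>` class naming scheme are hypothetical. Each array entry becomes a selectable option, and choosing one swaps the matching class on the `<html>` element; the CSS rules for those classes are shown as comments.

```typescript
// Hypothetical ids and class names: a sketch of an array-driven preference registry.
type PreferenceKind = "color" | "font";

interface PreferenceOption {
  id: string;    // short id, used to build the CSS class
  label: string; // text shown in the gear-icon panel
  kind: PreferenceKind;
}

// Adding a theme or font = one entry here + one CSS rule for its class.
const PREFERENCE_OPTIONS: PreferenceOption[] = [
  { id: "light", label: "Light", kind: "color" },
  { id: "dark", label: "Dark", kind: "color" },
  { id: "sepia", label: "Sepia", kind: "color" },
  { id: "pride", label: "Pride Month", kind: "color" },
  { id: "sans", label: "Sans-serif", kind: "font" },
  { id: "serif", label: "Serif", kind: "font" },
  { id: "accessible", label: "Accessibility font", kind: "font" },
];

// Matching CSS (hypothetical variable names), e.g.:
//   html.pw-color-sepia { --pw-bg: #f4ecd8; --pw-text: #3b2f1e; }
//   html.pw-font-serif  { --pw-font: Georgia, serif; }

function classFor(option: PreferenceOption): string {
  return `pw-${option.kind}-${option.id}`;
}

// The subset of Element.classList this sketch needs.
interface ClassListLike {
  add(...tokens: string[]): void;
  remove(...tokens: string[]): void;
}

// Lists the options of one kind, e.g. to render the radio buttons in the panel.
function optionsOfKind(kind: PreferenceKind): PreferenceOption[] {
  return PREFERENCE_OPTIONS.filter((o) => o.kind === kind);
}

// Removes every class of the same kind, then adds the chosen one.
// Returns false (and changes nothing) for an unknown id.
function applyOption(classList: ClassListLike, kind: PreferenceKind, id: string): boolean {
  const chosen = optionsOfKind(kind).find((o) => o.id === id);
  if (!chosen) return false;
  classList.remove(...optionsOfKind(kind).map(classFor));
  classList.add(classFor(chosen));
  return true;
}
```

In a browser, the call would look like `applyOption(document.documentElement.classList, "color", "sepia")`. Keeping every option in one array means a new theme needs only one array entry and one CSS rule.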

Reading mode

As part of a long-standing effort to improve the reading experience on ProleWiki, I have also deployed a reading mode toggle. It is currently in alpha until we decide where to put it, and you can enable it on desktop by pressing the 0 key (there is no toggle on mobile, as the website theme already handles this automatically).

This mode was entirely developed by Deepseek. I told it that I wanted to toggle a certain class on the <html> tag when the 0 key was pressed. Then I went into the theme's CSS file myself and manually removed the items I didn't want to show up. The original code had some bugs (for example, it could trigger while you were in the editor, i.e. while changing a page's content), but these were easily fixed by simply telling the LLM about them. You have to test code no matter who writes it.
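A rough TypeScript sketch of the keypress check, using a hypothetical `pw-reading-mode` class. It includes the kind of fix mentioned above for the editor bug: a `0` typed into a text field, textarea, or editable area is ignored, as are modified presses such as Ctrl+0, which browsers use to reset zoom. Events are reduced to a small interface so the logic can run outside a browser; the browser wiring is left as a comment.

```typescript
// The parts of a KeyboardEvent this check needs; a real event can be passed in a browser.
interface KeyEventLike {
  key: string;
  ctrlKey: boolean;
  altKey: boolean;
  metaKey: boolean;
  target: { tagName?: string; isContentEditable?: boolean } | null;
}

const READING_MODE_CLASS = "pw-reading-mode"; // hypothetical class name

// Form fields where "0" is text input, not a shortcut.
const EDITABLE_TAGS = new Set(["INPUT", "TEXTAREA", "SELECT"]);

function isTypingTarget(target: KeyEventLike["target"]): boolean {
  if (!target) return false;
  if (target.isContentEditable) return true; // visual editors use contenteditable
  return EDITABLE_TAGS.has((target.tagName ?? "").toUpperCase());
}

// True only for a bare "0" press outside any editor or form field.
// Modified presses are skipped so browser shortcuts such as Ctrl+0 still work.
function shouldToggleReadingMode(event: KeyEventLike): boolean {
  if (event.key !== "0") return false;
  if (event.ctrlKey || event.altKey || event.metaKey) return false;
  return !isTypingTarget(event.target);
}

// Flips the class and returns the new state (true = reading mode on).
function toggleReadingMode(classList: { toggle(token: string): boolean }): boolean {
  return classList.toggle(READING_MODE_CLASS);
}

// Browser wiring (sketch):
// document.addEventListener("keydown", (e) => {
//   if (shouldToggleReadingMode(e as unknown as KeyEventLike)) {
//     toggleReadingMode(document.documentElement.classList);
//   }
// });
// The CSS then hides unwanted items under that class, e.g.
//   html.pw-reading-mode .some-sidebar { display: none; }  (hypothetical selector)
```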

Of course, the code is a bit more complex than just adding a class to an HTML tag. It needs to save this choice so that your preferences also load when you open a new page (this is how I learned about the browser's localStorage API). It also needs to properly update the saved preference when you switch, say, from sans-serif to serif: it has to delete the sans-serif preference from localStorage and save the new serif one. The LLM handled this seamlessly by itself.
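One way to handle the persistence described above, sketched in TypeScript under assumed key names (`pw-pref-font`, `pw-pref-color` and so on are hypothetical): store one key per category, so saving `serif` removes and replaces the earlier `sans` value instead of leaving both behind. The `StorageLike` interface has the same method shapes as the browser's `localStorage`, which can be passed in directly.

```typescript
// Same method shapes as the browser's Storage, so window.localStorage can be passed in.
interface StorageLike {
  getItem(key: string): string | null;
  setItem(key: string, value: string): void;
  removeItem(key: string): void;
}

const STORAGE_PREFIX = "pw-pref-"; // hypothetical key prefix

// One key per category ("font", "color", "readingMode", ...), so a new choice
// replaces the old one. Passing null clears the category (back to the default).
function savePreference(store: StorageLike, category: string, value: string | null): boolean {
  const key = STORAGE_PREFIX + category;
  try {
    store.removeItem(key); // drop the previous choice, e.g. "sans"
    if (value !== null) store.setItem(key, value); // save the new one, e.g. "serif"
    return true;
  } catch {
    return false; // storage disabled or full: the choice simply won't persist
  }
}

// Called on every page load to re-apply saved choices.
function loadPreferences(store: StorageLike, categories: string[]): Record<string, string> {
  const prefs: Record<string, string> = {};
  for (const category of categories) {
    const value = store.getItem(STORAGE_PREFIX + category);
    if (value !== null) prefs[category] = value;
  }
  return prefs;
}

// In a browser: loadPreferences(window.localStorage, ["font", "color", "readingMode"])
```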

I learned CSS before LLMs were commercially available, but dark and light modes were not widespread on websites back then, and often relied on more rudimentary techniques (such as building a whole separate dark-mode website). With LLM help, I now know how to make a dark theme for any website, just as I would have learned from a tutorial.
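A common modern approach to dark themes is CSS custom properties: colors are defined once as variables, and a class on `<html>` swaps their values, so there is no need for a second website. The TypeScript below is a minimal sketch, with hypothetical class, key, and variable names, of choosing the scheme on page load: an explicitly saved choice wins, otherwise the operating system setting reported by `window.matchMedia("(prefers-color-scheme: dark)")` is used.

```typescript
type ColorScheme = "light" | "dark" | "sepia";

// Chooses the scheme on page load: an explicit saved choice wins,
// otherwise follow the operating system's light/dark setting.
function resolveColorScheme(saved: string | null, systemPrefersDark: boolean): ColorScheme {
  if (saved === "light" || saved === "dark" || saved === "sepia") return saved;
  return systemPrefersDark ? "dark" : "light";
}

// Browser usage (sketch, hypothetical key and class names):
// const scheme = resolveColorScheme(
//   localStorage.getItem("pw-pref-color"),
//   window.matchMedia("(prefers-color-scheme: dark)").matches,
// );
// document.documentElement.classList.add(`pw-color-${scheme}`);
//
// The CSS defines colors once as variables and lets the class swap them:
//   :root              { --pw-bg: #ffffff; --pw-text: #1a1a1a; }
//   html.pw-color-dark { --pw-bg: #121212; --pw-text: #e6e6e6; }
//   body               { background: var(--pw-bg); color: var(--pw-text); }
```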

(Reading mode will soon be deployed in a more accessible way than a keypress. We figure there's no rush, since we went without it for the past 5 years without issues.)

Simplifying JavaScript code for performance

Ultimately, all of this adds a lot of JavaScript code to the website. It's far from the most resource-intensive component (we still have images, PHP, and everything else running, as all websites do), but it's worth saving resources wherever we can.

When our custom JavaScript file had grown large enough, I set out to have Deepseek refactor it to improve performance. I simply gave it the 1000 lines (and yes, Deepseek easily handles over 1000 lines of code in one prompt) and told it what I wanted. I also had to intervene manually in some areas.

The process took so little time that I didn't even make note of it, and by the end we went from over 1000 lines of code down to 600. Much of that came from reworking the logic of our scripts, not just removing comments and line breaks. The JS file shed half of its original size. We also added checks so that some scripts don't run when certain conditions aren't met. That way, users on older or slower devices save on loading time and processing power.
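The refactored file isn't shown in the essay, so this is only an illustrative TypeScript sketch of the "don't run unless needed" checks: each script declares a cheap condition, and only scripts whose condition holds do any work. The script names and the `Library` namespace check are hypothetical examples. In a MediaWiki gadget, the page facts could come from `mw.config.get("wgCanonicalNamespace")` (an empty string for the main namespace) and `mw.config.get("wgAction")`.

```typescript
// Page facts a script might check before doing any work.
interface PageContext {
  namespace: string; // "" for the main namespace, "Library" for library pages
  action: string;    // "view", "edit", "history", ...
  isMobile: boolean;
}

interface FeatureScript {
  name: string;
  shouldRun(ctx: PageContext): boolean; // cheap check, evaluated first
  run(ctx: PageContext): void;          // the actual (possibly expensive) work
}

// Runs only the scripts whose condition holds; returns their names for logging.
function runApplicable(scripts: FeatureScript[], ctx: PageContext): string[] {
  const ran: string[] = [];
  for (const script of scripts) {
    if (!script.shouldRun(ctx)) continue; // skipped scripts cost almost nothing
    script.run(ctx);
    ran.push(script.name);
  }
  return ran;
}

// Hypothetical examples of guarded scripts.
const scripts: FeatureScript[] = [
  {
    name: "chapterNavigation",
    shouldRun: (c) => c.namespace === "Library" && c.action === "view",
    run: () => { /* build chapter navigation here */ },
  },
  {
    name: "readingModeShortcut",
    shouldRun: (c) => !c.isMobile && c.action === "view",
    run: () => { /* bind the 0 key here */ },
  },
];
```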

Book splitter script

Also as part of improving the reading experience, we have started splitting our library books into subchapters. You can see the result on this book: Library:Vladimir Lenin/Materialism and empirio-criticism.

This is a Python script that an LLM (Deepseek) coded entirely by itself. I won't lie, it was difficult. A lot of edge cases needed handling, and a lot of things it assumed (because we didn't think to tell it otherwise) had to be rewritten, but in the end we got it working. The script can now be used on any library page: it creates a table of contents that leads to subpages, each containing one chapter. All of this subcontent is then transcluded back onto the main page, so readers can choose how they read: either by subchapter or on the main page. Our philosophy is to give readers choices that best fit their preferences, and LLMs help us achieve this.
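The actual splitter is a Python script that isn't published here. As an illustration only, here is a TypeScript sketch of its core idea, assuming chapters start at level-2 (`== ... ==`) headings: the wikitext is cut into chapters, each chapter gets a subpage title, and the main page gets a chapter list plus transclusions that pull each subpage back in. It also assumes the book lives in a non-main namespace such as `Library:`, where `{{Library:Book/Chapter}}` transcludes a page; a main-namespace page would need the `{{:Title}}` form instead.

```typescript
interface Chapter {
  heading: string;   // text of the "== ... ==" heading
  pageTitle: string; // subpage the chapter is moved to
  body: string;      // wikitext found under that heading
}

// Matches level-2 headings only; "=== Sub ===" stays inside its chapter.
const H2 = /^==([^=].*?)==\s*$/;

// Drops some characters MediaWiki does not allow in page titles.
function safeSubpageName(heading: string): string {
  return heading.replace(/[#<>[\]{}|]/g, "").trim();
}

// Splits a book's wikitext into an intro (text before the first heading) and chapters.
function splitBook(bookTitle: string, wikitext: string): { intro: string; chapters: Chapter[] } {
  const introLines: string[] = [];
  const sections: { heading: string; lines: string[] }[] = [];
  for (const line of wikitext.split("\n")) {
    const match = H2.exec(line);
    if (match) {
      sections.push({ heading: (match[1] ?? "").trim(), lines: [] });
    } else if (sections.length > 0) {
      sections[sections.length - 1].lines.push(line);
    } else {
      introLines.push(line);
    }
  }
  const chapters = sections.map((s) => ({
    heading: s.heading,
    pageTitle: `${bookTitle}/${safeSubpageName(s.heading)}`,
    body: s.lines.join("\n").trim(),
  }));
  return { intro: introLines.join("\n").trim(), chapters };
}

// Main page: intro, a linked chapter list, then every chapter transcluded in order.
function buildMainPage(intro: string, chapters: Chapter[]): string {
  const list = chapters.map((c) => `* [[${c.pageTitle}|${c.heading}]]`).join("\n");
  const transclusions = chapters.map((c) => `== ${c.heading} ==\n{{${c.pageTitle}}}`).join("\n\n");
  return [intro, "== Chapters ==", list, transclusions].filter((s) => s !== "").join("\n\n");
}
```

A real run would also have to create the subpages (for example through the MediaWiki API or a bot framework) and handle the edge cases mentioned above, which this sketch ignores.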

Manual work still goes into such a project, of course. You can't just give the LLM one line and expect it to work from the start. Just like with a human coder, you need to brief it on what you want and how you want it. In this case, I already knew some of what I wanted: the table of contents, for example, transcluding chapter content back onto the main page of the book, and the often-overlooked navigation arrows at the bottom of each chapter. The hard part for me was writing the script that does all this, so an LLM wrote it in a few hours.
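The navigation arrows are a small piece of the same job. The TypeScript sketch below, with a hypothetical `pw-chapter-nav` class, builds a previous / contents / next footer for one chapter. Wrapping it in MediaWiki's `<noinclude>` tag keeps the arrows on the chapter subpage but stops them from repeating between chapters when the subpages are transcluded onto the book's main page.

```typescript
interface ChapterRef {
  pageTitle: string; // e.g. "Library:Author/Book/Chapter One"
  heading: string;   // link text shown to readers
}

// Builds the footer for chapter i: "← previous | Contents | next →".
// The first chapter has no "previous" link and the last has no "next" link.
function navFooter(bookTitle: string, chapters: ChapterRef[], i: number): string {
  if (i < 0 || i >= chapters.length) throw new RangeError(`No chapter at index ${i}`);
  const parts: string[] = [];
  if (i > 0) {
    const prev = chapters[i - 1];
    parts.push(`← [[${prev.pageTitle}|${prev.heading}]]`);
  }
  parts.push(`[[${bookTitle}|Contents]]`);
  if (i < chapters.length - 1) {
    const next = chapters[i + 1];
    parts.push(`[[${next.pageTitle}|${next.heading}]] →`);
  }
  // <noinclude>: shown on the subpage itself, hidden when the subpage is transcluded.
  return `<noinclude><div class="pw-chapter-nav">${parts.join(" | ")}</div></noinclude>`;
}
```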

Could a human dev write this script? Yes, of course. But they might not be able to do it as quickly, or in the way you want. We have looked for developers of all kinds for years without much luck. Alas, people have their own preferences and jobs, and we can't ask them to put 40 hours a week into ProleWiki for free. They might also prefer to do things their own way, delivering not exactly what we need but what they feel like delivering. This isn't a criticism, but rather an important point to consider regarding LLMs. LLMs will give you the result you ask for; sometimes this is good and sometimes it falls short. But they won't judge you or refuse to work for you.

This kind of volunteer project is an area where using LLMs makes a lot of sense, as it lets us deliver to our readers much faster and with minimal resources or investment, which we don't have in the first place.

Translating pages and works

We have used LLMs to translate pages and entire library works, though with mixed results. A lot of prompting is required to get something close to the original. The quality is readable and it doesn't hallucinate, but the LLM might decide to shorten a sentence, and it won't always translate technical terms the same way every time (because you feed it the book in chunks).
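Chunking is where the inconsistent terminology comes from, since each chunk is translated without memory of the others. A common mitigation, shown here as a TypeScript sketch rather than ProleWiki's actual process, is to split on paragraph boundaries and repeat a fixed glossary in every prompt, along with an explicit instruction not to shorten sentences. The character budget, prompt wording, and glossary entries are hypothetical, and no particular LLM API is assumed: the functions only build the prompt text.

```typescript
// Splits text into chunks of whole paragraphs, each at most maxChars long
// (a rough stand-in for a token budget). An oversized paragraph becomes its own chunk.
function chunkParagraphs(text: string, maxChars: number): string[] {
  const paragraphs = text.split(/\n\s*\n/).map((p) => p.trim()).filter((p) => p !== "");
  const chunks: string[] = [];
  let current = "";
  for (const p of paragraphs) {
    const candidate = current === "" ? p : `${current}\n\n${p}`;
    if (candidate.length > maxChars && current !== "") {
      chunks.push(current);
      current = p;
    } else {
      current = candidate;
    }
  }
  if (current !== "") chunks.push(current);
  return chunks;
}

// Builds one prompt per chunk. Only glossary terms that occur in the chunk are
// listed, which keeps prompts short while fixing how those terms are rendered.
function buildPrompt(chunk: string, glossary: Record<string, string>, targetLang: string): string {
  const lines = Object.entries(glossary)
    .filter(([source]) => chunk.toLowerCase().includes(source.toLowerCase()))
    .map(([source, target]) => `- "${source}" → "${target}"`);
  const glossaryBlock =
    lines.length > 0 ? `Always translate these terms exactly as given:\n${lines.join("\n")}\n\n` : "";
  return `Translate the following text into ${targetLang}. Do not shorten or summarize sentences.\n\n${glossaryBlock}${chunk}`;
}

// Hypothetical glossary for an English-to-French run.
const glossary = { "class struggle": "lutte des classes", "surplus value": "plus-value" };
const prompts = chunkParagraphs("The class struggle continues.\n\nSecond paragraph.", 4000)
  .map((chunk) => buildPrompt(chunk, glossary, "French"));
```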

We previously looked into getting The CIA's Shining Path: Political Warfare professionally translated. A professional translation of such a book might cost over $12,000, a sum we would never be able to raise, even with a fundraiser. With an LLM, we can also always redo the translation as models improve, and update it. In fact, this book was originally translated with what is now considered an obsolete model.

With this kind of tool, we can bring a lot of theory that was previously available in only one language to the masses. It might not be the best translation ever, but it doesn't need to be if it's the first of its kind. Professional translators remain free to produce their own edition later. At this stage, however, we are still refining our prompting and translation process to deliver more accurate results.

If we can get page translation to at least 90% accuracy, we are considering using local models and a script to automatically translate all of ProleWiki into other languages. This makes sense because: a) we are not professional translators and might not do a better job than the LLM; b) it would take a team of volunteers years to do this, whereas a script can run nonstop until it finishes; and c) we mostly work on the English instance (it's sort of the main branch), so it has most of the content. Even editors who contribute to other instances still participate most on the English one.

Troubleshooting VPS problems

MediaWiki is a pretty big piece of software. While its documentation has some weak spots, it's ultimately very powerful software that can do everything we need: some of it comes ready-made, and some of it we have to set up ourselves.

For this reason, we run MediaWiki on a virtual private server, or VPS. This means we are responsible for handling server load, vulnerabilities, and bugs that might appear.

There are some things I can't talk about, as they are sensitive to our operations, but I have used LLMs as an assistant to fix VPS issues with great success. Of course, you shouldn't blindly trust an LLM when it comes to sensitive server management. Double-check both online and with someone else, and if you're not sure about something, don't do it. But troubleshooting (for example, running netstat) is safe, and an LLM can help you with that. You can then send it the output or log, and it will find the issue.

Likewise, it can help install new software. A VPS doesn't come with anything beyond the absolute minimum, so we installed fail2ban ourselves to manage bot traffic. The LLM helped me install it and set it up with the rules I wanted, and now I know how to do it without help. To be clear, the LLM only provided instructions through its online interface; I then read through them and followed the guide manually. At no point did the AI connect to our VPS and make changes itself, as this could be very dangerous.
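fail2ban is configured through jail settings rather than code, and the essay doesn't share its rules. To show what such a rule does, here is a toy TypeScript model of a jail's core logic, not fail2ban's implementation: an IP that produces `maxRetry` matching log lines within `findTime` seconds is banned for `banTime` seconds. These parameters mirror fail2ban's real `maxretry`, `findtime`, and `bantime` options; the IPs (documentation ranges) and numbers in the example are arbitrary.

```typescript
// One matched log line (e.g. a request a filter flagged as abusive); time in seconds.
interface Hit {
  ip: string;
  time: number;
}

// Toy model of a jail rule, not fail2ban's code: an IP that produces maxRetry
// hits within findTime seconds is banned for banTime seconds.
// Returns, for each banned IP, the time its most recent ban ends.
function computeBans(hits: Hit[], maxRetry: number, findTime: number, banTime: number): Map<string, number> {
  const recent = new Map<string, number[]>(); // ip -> hit times still inside the window
  const bannedUntil = new Map<string, number>();
  const ordered = [...hits].sort((a, b) => a.time - b.time);
  for (const { ip, time } of ordered) {
    const until = bannedUntil.get(ip);
    if (until !== undefined && time < until) continue; // banned: traffic never reaches the server
    const inWindow = (recent.get(ip) ?? []).filter((t) => time - t < findTime);
    inWindow.push(time);
    if (inWindow.length >= maxRetry) {
      bannedUntil.set(ip, time + banTime);
      recent.set(ip, []); // start counting afresh after the ban
    } else {
      recent.set(ip, inWindow);
    }
  }
  return bannedUntil;
}

// Three hits in three seconds with maxRetry 3, findTime 60, banTime 600.
const demo = computeBans(
  [{ ip: "203.0.113.5", time: 1 }, { ip: "203.0.113.5", time: 2 }, { ip: "203.0.113.5", time: 3 }],
  3, 60, 600,
);
console.log(demo.get("203.0.113.5")); // 603
```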

Over time, I also became more comfortable with our Linux distribution (which we use entirely from the command line), and I can now more or less manage a VPS myself. Previously, only one person was able to manage the VPS: if they got sick or busy with life, we could do nothing if the website suddenly went offline or we needed to install something new.

Could you learn this from a Google search? Yes, but: 1. the results wouldn't necessarily fit your specific case, and you'd have to make more searches to fully understand the problem; 2. what matters is that you learn from somewhere. And even today, as a CSS pro, I still routinely have to google some basic properties because I forget whether I want align-content or align-items for my flexbox. Let's not pretend that before LLMs we all had perfect memory and got things right on the first try.

There are some things LLMs have been unable to fix for us, but those are also things we couldn't fix ourselves. Some errors truly don't make any sense and shouldn't be happening, so I can't fault the LLM there. In most cases, a bit of back-and-forth is all it takes to find the problem and fix it.

More to come

I probably have more examples that I've simply forgotten, because using LLMs has become such an integrated part of our process on ProleWiki. We don't use them to write content directly (although they can help with syntax and with simplifying complicated sentences), because that's not really where they shine. But we use them a lot for technical aspects and, more importantly, to help fill in our weak spots.