Intellectual property in the times of AI

by CriticalResist

Published: 2025-11-07 (last update: 2026-02-05)

60-100 minutes

Commercial AI has existed for the past 3 years, kicked into gear by the release of ChatGPT in November 2022. Prior to that, research into machine learning and neural networks had existed for several decades, though it had trouble finding widespread use due to the hardware limitations of the time. It was in the 2000s that "deep learning", the foundation of modern neural networks (and the technique used to train what becomes ChatGPT, Midjourney, DeepSeek, and all the famous names), became a reality.

Regardless of one's feelings on the matter, we can clearly say that AI has indeed had an impact on society because it never fails to provoke heated arguments. And not only that, but the arrival of commercial models, that is, models that can be run either in your browser (on a cloud service) or on your own machine (local) -- and not just in university labs anymore -- seems to have shifted perspectives entirely. What becomes bothersome is that self-proclaimed communists seem to have done a complete 180 on intellectual property, and this is what we want to focus on in this essay.

Some definitions first: by AI, I mean modern neural networks specifically -- which includes but is not limited to generative AI such as Large Language Models or image generation models. By art, I mean any of the established art forms: painting (i.e. illustration), sculpture, literature, architecture, film, music, etc. A lot of the discourse around AI and IP (intellectual property) revolves around illustrative output specifically, which completely ignores film, literature and music. We will be using these definitions implicitly throughout the essay.

The five arguments of anti-AI

There are many, many things to discuss about AI (both good and bad!), and we'll get into them. But most of the time, anti-AI discussions revolve around five arguments:

- It's a bubble,

- You stop using your brain,

- It's not actually art/it looks bad,

- It's bad for the environment,

- It destroys jobs.

This makes any discussion outside of these five hot topics impossible. I know that by pointing out these arguments and responding to them I will invite discussions on them when my goal here is to talk about intellectual property, but I feel I couldn't properly talk about IP laws if I didn't address these arguments first. This is because at their core, these arguments come from a place of not wanting to actually struggle with the problem. Rather, some people seem to think that if they ignore AI (the ostrich technique) or deride it and its perceived proponents enough, it will suddenly go away.

But clearly AI is not going away - you can't put the toothpaste back in the tube. This isn't a crypto or NFT situation, this is something that over a billion people have used in their daily life already, and keep adopting. And not only that, but there is a very overlooked open-source ecosystem that goes far beyond what the proprietary likes of openAI or Midjourney offer. Even if you were somehow able to stop AI development completely (which would also mean stopping research into neural networks, including research on medical, industrial or agricultural applications aka stuff that can make our lives better), what would happen to the models living on people's machines right now? They will still exist and still be usable. Open civitai or huggingface right now and look at all the new models coming out every hour of the day, all working on slightly different principles to try and push the limit of what neural models are capable of; and think that this has been going on for 3 years straight and shows no sign of stopping!

Therefore as communists we must struggle with the topic, no matter how uncomfortable it may be, to find its dialectic and resolve the contradictions, from which will emerge a third new thing that did not exist previously. As communists we have had to struggle with many things before, and we will continue to do so. We can't abdicate as soon as something is too difficult to wrap our head around, because our goal is to demystify the world. We don't have the luxury of saying "I'm just not that interested in that" because the bourgeoisie isn't saying this. In fact, they're very happy that we refuse to level the playing field.

It's a bubble

Yes, AI in the West is a bubble. It's similar to the dot-com boom of the late 1990s: huge, risky investment for a potential future payoff that does not seem to be coming. But overvaluation is not new; we have historical examples to analyze, and we see that after the dot-com bust of the early 2000s, the Internet did not go away. Rather what happened was that some companies disappeared, and their property, talent and infrastructure were acquired by what are now today's giants: Amazon, Google (now Alphabet), eBay, Cisco, Nvidia and Oracle. They acquired talent and property on the cheap during the bust phase and 20 years later decide for us what goes on and where on the Internet -- Amazon and Google especially. Fun fact: Amazon's real money-maker is not their online store, it's their Cloud service named AWS. It hosts over 1 in 20 websites (5% of the entire Internet) by some estimates, most of them big household names.

The boom and bust cycle was clearly explained by Marx. A new market emerges, capital floods it and tons of new companies open (the boom phase), but then overproduction lowers the rate of profit: a sizeable portion of the companies that opened during the boom go bankrupt and we enter the bust phase. But an equally important part of Marx's analysis is that not all companies go bankrupt in that phase. Those that remain solidify their position in the market, and become monopolies. The boom and bust cycle doesn't just explain economic crises but also the tendency of capitalism to form monopolies.

The belief that AI will go away after the bubble pops is supported neither by theory nor by historical examples. Not only will open-source models still exist and be available after the bust, not only will research into neural networks still take place (by very skilled engineers might I add, who are often completely ignored in the conversation), but what will happen is AI will get consolidated into fewer companies. And yes, I do believe AI in the West is an overinflated bubble, predicated on capitalism's own contradictions (the inherent 'anarchy of production' as Engels said and the incessant need to open up new markets because of the tendency for the rate of profit to fall): they are trying to make it profitable and pumping money into it to get a return on investment. But as Chinese models show, AI is actually not that big of a market; it's overvalued.

When Deepseek-r1 came out in January of this year, it completely destroyed the western model of the AI market. Deepseek is a smaller model that came out of nowhere (the parent company is a trading company, so not in AI or even a "tech" giant originally - though they are a giant of trading in China), trained on less than 100 million dollars versus the 1 billion USD openAI swears high and low it absolutely needs to do literally anything with. And yet, it delivers similar if not better results in its generations. Suddenly, the investment into Western companies doesn't seem viable and people, both investors and users, are realizing they got taken in by capitalist promises.

What we need to do when it comes to the AI bubble is to place it in its proper theoretical context. Personally I'm not even sure what the people who hope for the bubble to burst actually expect to happen afterwards. A part seems to believe that all AI will suddenly vanish because openAI will close down, while another part expects that it will...? I truthfully don't know. Like I said, it's a simple argument to end all conversation: the bubble will burst, so just wait for it. Nothing else needs to be said.

But things do need to be said. What will we do when this bubble crashes the economy? It's not just tech companies investing in AI, it's pension funds, banks (using your money), your employer and even school boards! Everyone that has some cash lying around basically. We are gearing up for a 2008 crisis, if not worse. Instead of wishfully thinking that all our problems will get solved by the contradictions of capitalism, the time to organize the workers and prepare for the crisis is now, before it actually happens.

And when we see that a company like Microsoft (i.e. legacy tech giants) is buying up shares in openAI to the point that they now own over 27% of the company,[1] it seems pretty clear to me why they're not waiting for the bubble to burst to buy at cheaper prices: when openAI goes bust (because their only product is AI and they survive on investor money), it will get carved up in liquidation, and Microsoft will be right there to appropriate everything they can: the talent, the infrastructure, the LLM models, and so on. A process of monopolization will happen and established companies are gearing up for it.

In other words, ChatGPT might disappear, but it'll resurface under the name of Copilot.

Microsoft is not the only one. Google (Alphabet) is buying up AI startups, though this process is not new for them. It lets them get rid of competition before it even has a chance to get off the ground and consolidate their own AI product. Salesforce is investing in Anthropic, the makers of Claude.

And I'm not sure what will happen in the AI ecosystem after the bubble bursts either, but I know that it will happen whether I want it to or not. I can't will it out of existence. What I do know is that some monopolization will occur in the West while China will continue its research, but I'm not sure what this shift will mean materially. It's too early to tell until the moment it happens. What we need to do is prepare and organize now before it strikes.

You stop using your brain

My parents and teachers also said TV would fry my brain and we shouldn't have a calculator in our pockets all the time. What we found instead is that people adapt to new conditions, and have done so for millennia. Do I also stop using my brain when I use a lighter to make a campfire instead of rubbing sticks together? And, is knowing how to make friction fire a required skill in the 21st century? The example is a bit far-fetched, but it's how society advances. Skills come and go when they are no longer socially necessary (borrowing here from the concept of socially necessary labor time). Who could say today they know how to use a mechanical loom, or could drive a steam locomotive? And yet, our ancestors who did run the steam locomotives would likewise be completely lost if we put them in a modern train cabin.

Many arguments brought against AI today have been made historically, and found insufficient by the passage of time. Some people were also opposed to electrification when it came out -- though a lot of it was spurred by the gas lobby. What we found instead is that gas companies started getting into the electricity market when the writing was on the wall. Today, anyone saying they would rather go back to gas lighting over LED lightbulbs would not be taken seriously. Is AI the modern electric lightbulb? Not necessarily, but maybe? It is clear however that having a kneejerk reaction to it, in either direction, will not seriously answer the question: both from the capitalists that swear it will do everything (LLMs clearly don't and neural networks in general still need more research done into them to make more uses viable), and from the comrades that think it does nothing whatsoever -- we use it a lot to code front-end stuff on ProleWiki which improves the reading experience of our readers, to name just one use case. If you use a custom font or the sepia theme while reading this essay, you are using code written by AI (and if you didn't know you could customize your reading experience, tap the gear icon on the sidebar and try out the options!)

What matters is intent and having the tools to realize that intent. What we've found through our usage of AI is that it doesn't replace thinking but makes it happen differently, at a different level. It's like hiring a developer: you still have to know what you want and you have to be able to communicate that -- which is a skill you learn when using AI. And if you've ever worked with clients, you know how difficult that is (they made it into a whole job, Product Managers). People adapt, and I don't believe current studies that are trying to sound the alarm about AI are taking this into account; and yes, I've read them. A lot of them compare AI to established methods like using search engines, but search engines were not easily adopted when they came out. Since then, we have learned not to memorize stuff so much but to know how to look for it when we need to access it. This isn't just me bullshitting, this was tested in a study from May of this year.[2] Google became a memory bank, but the problem with Google is they can change the algorithm and the product at any time, and you lose that memory bank at their sole discretion. OpenAI did the same thing: many people used GPT-o4 a certain way, with a certain custom prompt. Then, OAI decided to retire o4 and force everyone to use 4 and 5 (forgive me if I mix up the model names). Overnight, thousands of users lost what they had built, because this is at the end of the day all proprietary software that someone else controls for us.

The whole "this new tech is the end of civilization" trope is overplayed. It's overplayed in our media, and in real life too.

It's not like we didn't have slop before AI either. The majority of Youtube video essays are objectively slop, if we want to use the term. They are videos you put on in the background while doing something else, designed to be easy to listen to, with a title designed to get you to click on it. No AI is needed to make yet another "How [show or video game] gets away with LYING to you". Whenever a new indie game becomes popular, you can expect 100 copies of the formula to appear on Steam because they have stopped doing quality control. The music industry has been putting out slop for as long as it's existed - creating bands out of thin air with personalities designed to make you identify with them, their lyrics and melody written by the same 20 people who have been doing this work all their life. It doesn't matter if Rihanna or Beyoncé is singing the song, it was written by some Swedish guy in his 50s either way. (Also forgive me for not keeping up with who the current stars are, I clocked out after 2009.)

In the same way, I don't like using the word Luddite. The Luddites were a historical movement that does not exist in our current conditions: it's an easy cop-out to not have to make a point. But we need to make arguments, and we need to make them sooner rather than later. To do that, we need to abandon kneejerk reactions and treat our comrades on an equal level, and one side is more guilty of this than the other. In fact, I already expect many of these reactions to this essay. What I ask for is not necessarily that you become pro-AI and wear "I heart AI" t-shirts today, but that you treat comrades on an equal level and listen to their arguments. And, if you want to remain ambivalent on AI and don't feel like learning how AI works down to the details, then be principled enough to keep it to yourself and let other communists who do want to learn how it works do it for you, and report their results and use cases. Work collectively, from each according to their abilities. Take the good and discard the bad.

AI art looks bad

So did the first human cave paintings. So do my own illustrations. I use art to mean all art forms, but this argument is usually reserved for illustrative work specifically. But focusing on illustrative art (painting, drawing etc) is a trap; it creates a microcosm that prevents us from looking at the big picture. In fact, generative AI may be able to help get around IP laws. The actual effect it will have is difficult to predict and we don't have any hope of capitalism doing the right thing, of course, but to me this is a much more important area to focus on. Yes AI art may look bad, and yes it may not be art, but then what? What has this argument changed in the past 3 years it's been used aside from getting some approving nods in certain circles? Facebook is rife with terrible AI images passed off as real because they opened up monetization a few years ago, and now everyone is trying to post as often as possible to get as many impressions as possible to make as much money as possible. Models are getting better and better at generating images, to the point that some look absolutely lifelike. Clearly, the technology is improving, not stagnating. And it will continue to improve no matter how much you might say that it looks bad. AI engineers just don't care about this argument because their point of view is that they will solve this problem if they keep working at it, so clearly if the point is to convince people, it's not an effective argument to use.

This is an image generated by a local, open-source model using only my own GPU:

Does it have artefacts? Yes, probably. The text probably makes no sense and I'm sure looking deep enough into it you might notice some things that are not natural. There's not really a background and the grain of the table looks a bit fake. But it also looks very realistic, and this is where we're at now - all generated on my own GPU. 3 years ago image models could not even get books right.

This is what you have to contend with if you want to remain anti-AI, and it will give you much stronger arguments than simply saying "it looks bad" as if that was a given outside of specific circles that already agree with this opinion. The ecosystem is developing and moving with or without you, and with or without me too! Even if we were to ban AI, even if we were to break up openAI, Grok, Gemini, and whatever else exists commercially, even if we stopped all research into AI, even if one thinks 40 seconds to generate this book cover on a wooden tabletop is simply outrageous, we will not be able to remove this model from thousands of people's machines. It will be able to keep creating images like these for years to come.

On the question of art

My point here is that this is the reality we are now in, and arguments about quality have never stopped anyone, because what counts as quality to you may not be so important to someone else. I come from a graphic design background, and when I was in school spec work was all the rage to rally against -- spec work is the practice of a client submitting a design request for which designers submit their work, the client then picks one winner and pays them while the other participants leave with nothing, having performed free labor. For all our posturing, arguing and debating against spec work, we did not stop a single contract from being signed. How could that be? It was because there was no real organizing. It was the futile kneejerk reaction of a profession that felt threatened by something they could not stop. Instead of yelling at people to not do spec work and pay money they did not have for a designer they could not afford, designers should have found better arguments and ways to work around spec work: what can a designer provide that spec work can't?

And not all illustrative work is meant to look good. Some is just meant to accompany a blog post, or fill up space in a PowerPoint. Not all illustrative work is art, plainly speaking. First of all because there cannot be true art in capitalism (its nature as a commodity to be bought and sold will always overtake the artistic aspect), and secondly because not everything is inherently art by virtue of being drawn. As a graphic designer, I never thought that the logos and designs I made were art in any way: they exist to convey a message, usually a marketing one, or solve a problem. We make logos, flyers and videos to get you to buy something or click on a button, not to hang on your wall and admire. This is why I separate illustrative work from art; not all illustrative work is inherently art.

As a final point to this admittedly long section: I also want to say that there are many, many things happening outside of image generation, and they're not just theoretical; they're happening right now. AI is getting much better at coding every semester, to the point that it can now reliably make you apps without learning to code. It allows everyone, including organizations, to start coding scripts and internal apps for their needs. There are open-source agentic clients for it, that will make the AI read your codebase and keep track of what needs to be done, and then do and redo it until it works right. I have built an entire pipeline in python to translate ProleWiki English (or any book) to any language using Crush, which is an agentic interface.[3] And that's just one small part of what goes on in the open-source community, and has 0 overlap with image gen. My point is: keep looking at the big picture, don't get absorbed into the microscope. Examine things in their totality.

It's bad for the environment

All of the above leads me to answer the environmental aspect: first, there is currently a lot of research being done to severely reduce the environmental impact of training and running AI. Just recently, Alibaba (who trains Qwen) found an algorithm to squeeze more models on GPUs, reducing the number of graphics cards needed by 82% in their datacenter.[4] Secondly, once the model is made, these 40 seconds of my GPU being used to make a picture amount to next to nothing: inference runs at a constant 65W of GPU power on my machine, which works out to well under 1 watt-hour per image and is less wattage than when playing a video game. Open-source AI is clearly the way forward, as all software is, and this is just one more reason in favor of it.
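As a rough check on the figures in this paragraph: power (watts) and energy (watt-hours) are different quantities, and a constant draw only becomes consumption once it is multiplied by time. The sketch below uses the 65 W and 40 seconds quoted in this essay. The function name `energy_wh` is made up for illustration, and the result covers the GPU alone, not the rest of the machine.

```python
def energy_wh(power_watts: float, seconds: float) -> float:
    """Energy in watt-hours for a constant power draw held for `seconds`."""
    return power_watts * seconds / 3600.0

# Figures quoted in the essay: 65 W of GPU power for a 40-second generation.
image_wh = energy_wh(65, 40)

# How many such images fit into a single kilowatt-hour of GPU energy.
images_per_kwh = 1000 / image_wh

print(f"One image: {image_wh:.2f} Wh")
print(f"Images per kWh: {images_per_kwh:.0f}")
```

Under these assumptions one image costs a fraction of a watt-hour, so well over a thousand of them fit into one kilowatt-hour.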

Speaking of, we will come back to Deepseek but the full model is open-weight (soon to be open-sourced) and you can build a 2000$ machine to run it entirely locally.[5] It's the same model as on chat.deepseek.com except it's not censored and the entire machine only consumes 260 watts while running inference (a washing machine cycle consumes well over 1000). With new innovations it is very likely that soon everyone will be able to run these models on devices they already own - or at least, this is where we ought to be heading and why Chinese models are so important. If we lived in a world where only the US was capable of producing models, then we would indeed be in deep trouble.

We have to be careful with how we wield environmental arguments because they can very easily be used against the working class. Watching videos on Youtube is also bad for the environment: Youtube hosts centuries worth of footage, and they need datacenters not only to store all of those videos, but also to serve them to you quickly on a web page. A few years ago, it was all the rage on Linkedin to rant about the climate impact of Youtube, and predictably Linkedin's best answer was: "stop watching Youtube, or if you do then feel bad for doing it". When the argument amounts to nothing else than "AI bad for the environment," then the only logical conclusion is that everything is bad for the environment and therefore we shouldn't have anything. No videos to take your mind off work when you come home tired, no Internet to read books and talk to other communists either. This is not only undialectical, but also anti-civilization.

Technology saves lives

If we were to focus on how datacenters operate currently however, then we could argue for better laws to protect the environment while still retaining data centers. This is something capitalism is very bad at doing (see the Paris Accords), and gives us an angle of attack to organize under. Capitalism will not save the climate, so we need to move beyond capitalism. It's not just the datacenters, it's the whole system. Of course openAI and Google don't care about the environment, there's no mechanism that forces them to care! What matters is making these mechanisms, and then I guarantee we will make sustainable AI in 3 years' time. And not just sustainable AI, but a sustainable planet that doesn't sacrifice our lives. I referred to anti-civilization in the previous paragraph; a lot of people are anti-civilization because they think degrowth is desirable. But there is one very solid argument against any sort of degrowth: saving lives. If you've ever had a health scare that didn't turn into a health tragedy in your life, it's because we had the technology to save your life.

China is already using remote controlled quadcopters to save people from floods.[6] Billions of people need medication daily to stay alive: before the medication existed, people simply died. AI is, at this moment, being used in medical applications to help save people's lives.[7] It's just that capitalism is not very good at doing this because it creates more investor interest to be able to say you have however many million users on your platform, and you get them on your platform by advertising things like a chatbot friend or assistant that can provide advice (even if that advice is hallucinated).

What we need, clearly, is socialism. We have to look at things in their totality and not laser-focus on the specifics. And looking at the totality of the AI problem, we see it boils down to capitalism over and over again, every time. The contradiction is not technology vs no technology, it's the class struggle.

The problem with environmentalism in the West is that we want solutions that don't exist yet, and in the process we take a hard "retvrn" stance and throw the Global South under the bus. If we don't have a perfect solution to a problem right now, then we presume no solution must exist. China on the other hand is investing heavily in solar (they are adding the entire US grid worth of solar every 18 months)[8] but in the meantime, they are also using coal. To them coal is not inherently bad or dirty, it's just an obsolete method of producing energy now that there are better alternatives. But, until they can get the alternatives up and running, they will use coal. I'm not saying the west should use more coal (and we are by far the biggest contributors to climate change), but that we can make solutions if given the possibility to make them, they just won't happen immediately.

There was a similar uproar against 5G when the towers were first being built. Your mileage may vary but everyone I talked to was unequivocally against 5G. They felt 4G was more than enough, and there was an entire thing about how the "microwaves" were going to roast our brain or something. And sure, you could argue 4G is more than enough for browsing the web. But today with 5G, China is performing remote surgery from thousands of miles away.[9] This technology is saving lives -- 4G has too much latency and fiber optic can't be laid over a 4000km stretch to make surgery possible. And all this tech isn't just for surgery, it's simplifying the entire healthcare process and freeing up doctors' time so they can fit in more patients in their day. A specialist doesn't have to take a plane there and back to perform surgery, he can just load up the device from his location. Now China is studying and deploying 6G while in the West we're signing petitions to stop 5G towers from being built.

That single event, seeing that it was possible to perform surgery (and other life-saving applications) with 5G, instantly made me flip on my stance.

Besides, how do we decide what is necessary or not? You might say this or that new medication is not necessary because there's already an older one, and I might say that having air conditioning in the summer is unnecessary because I live in Siberia. What I mean is that, once again, we have to look at things in their totality before their specifics. Nothing is inherently "unnecessary" or "a waste", and once we properly place them back in their context and analyze their dialectics we have a clearer picture. To unequivocally condemn AI as bad because of some specifics is not even half the battle - the question isn't "is this good or bad" it's "what does this achieve? How can we seize on this?"

On the environmental impact

Furthermore, we are just not sure what environmental impact AI is having at the moment. This is the case for many industries: it's hard to get an exact consumption estimate. This is another reason why I promote a solution focused on the totality: environmental problems are not due to this or that tech, they're a policy problem. We have, in the West, an energy policy that is completely at odds with what we need and what we could have -- see China adding a whole energy grid's worth of solar every 18 months. Instead of allowing cheap Chinese solar panels into our markets however, we tariff them to hell and back so that people don't buy them and instead rely on overpriced local panels. How come China is at the forefront of AI innovation with only ~530 datacenters[10] for 1.5 billion people, while the US has over 5400 centers[11] for a population of ~330 million, or only about 0.22x China's? That is the question one needs to answer if they are concerned about the climate impact of datacenters. I won't do it for you - there is some homework involved!
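To make the comparison concrete, here is a minimal sketch that normalizes the datacenter counts by population. It uses only the rounded figures quoted in this essay (they are not independently verified here), and the helper name `per_million` is hypothetical.

```python
def per_million(count: float, population: float) -> float:
    """Number of datacenters per million inhabitants."""
    return count / (population / 1_000_000)

# Rounded figures as quoted in the essay, not independently checked.
china = per_million(530, 1_500_000_000)
usa = per_million(5_400, 330_000_000)

population_ratio = 330_000_000 / 1_500_000_000  # US population relative to China's
density_ratio = usa / china                     # US datacenter density relative to China's

print(f"China: {china:.2f} datacenters per million people")
print(f"US:    {usa:.2f} datacenters per million people")
print(f"US population is {population_ratio:.2f}x China's")
print(f"US density is about {density_ratio:.0f}x China's")
```

A raw count says nothing about the size or efficiency of each facility, so this only frames the question; it does not answer it.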

There is a real concern about datacenters Elon Musk built around Memphis (a predominantly Black city) and how they contribute to local pollution, in the air and the water. And this isn't just CO2 (GHG) pollution. This is true. What is always missing from the discussion however is that this pollution is driven by the centers' gas turbines which power their energy demand. Normally, federal approval is required for even one turbine, and they are only deployed in crisis situations, temporarily. Musk did not want to wait for an official decision and to be connected to the grid, and so he installed turbines without asking anyone.

I'm not here to excuse dangerous choices made by capitalists. That the government is abandoning the people in these communities so a billionaire can build a datacenter is also a problem to be addressed. The problem here is not the datacenter itself, but how it is being powered. In China, they are powering their centers with solar energy and gravity batteries.[12] But that's beside the point; the crux of the matter is that if it wasn't an AI cloud datacenter, it would be something else. Again, the problem is capitalism, not AI. AI did not invent pollution -- far from it when you consider PFAS, heavy metals in the water, and other non-GHG (greenhouse gas) pollution. Overall, its contribution to water usage and emissions is, if the studies are to be believed, very small. Golf courses in the US alone require more water to maintain than AI does, by far.

I could make the point that the average person in the US consumes about as much water in a year as it takes to produce 14-21 hamburgers (660 gallons of water for a third of a pound of meat, versus 9500-14000 gallons per year per person in the US = 14 to 21 burgers). That's really not a lot of burgers compared to an entire year's worth of human consumption. If that person were to eat only burgers for lunch and dinner, it would take them a week to ten days to reach the threshold.
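The arithmetic behind that comparison can be sketched as follows. The 660-gallon and 9500-14000-gallon figures are the ones quoted in this essay and are taken at face value; the constant and function names are made up for illustration.

```python
GALLONS_PER_BURGER = 660          # essay's figure for a third-of-a-pound burger
YEARLY_GALLONS = (9_500, 14_000)  # essay's per-person yearly range
BURGERS_PER_DAY = 2               # "only burgers for lunch and dinner"

def burgers_equivalent(gallons: float) -> float:
    """How many burgers' worth of water a given volume represents."""
    return gallons / GALLONS_PER_BURGER

low, high = (burgers_equivalent(g) for g in YEARLY_GALLONS)
days_low, days_high = low / BURGERS_PER_DAY, high / BURGERS_PER_DAY

print(f"Yearly water use equals {low:.1f} to {high:.1f} burgers")
print(f"At {BURGERS_PER_DAY} burgers a day: {days_low:.1f} to {days_high:.1f} days")
```

Changing `BURGERS_PER_DAY` or the yearly range shows how sensitive the comparison is to the assumed diet and water figures.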

Meat production, at least in the West -- I am not familiar enough with the counter-examples to speak on them -- is the most energy-intensive industry we have. And the US Army is the biggest polluter. But we are still told to recycle our little water bottles and leave the lid on the pot while boiling water to conserve energy.

While I could make this argument, I would be going against my own point if I said that there was no way whatsoever to reduce water consumption in animal farming. However, I do think that people who are currently ringing the alarm bell about the theoretical energy/water consumption of AI would do better to first examine their own consumption patterns and apply that to the big picture. If you think other people using AI (as opposed to companies not being forced to improve, which of course we know is a pipe dream in capitalism and thus we need socialism) is the real problem, then the objectively best solution is to become vegan first. Then cut out air conditioning, then cut out the car, then cut out devices other than a rudimentary mobile phone -- the list never ends. And while we scold each other and pride ourselves on how little we consume, BP commits another oceanic oil spill.

This is acceptable from liberals, but as communists we know that systemic change is the only real way to produce lasting change. Such arguments are a catch-22: you will not find the perfect consumer, everyone is "failing" somewhere, and the idea of being a responsible green citizen was created by fossil fuel companies in the first place to push the responsibility for climate change onto the consumer rather than their own patterns.

In short: we need societal policy change, not just symptom treatment. There is no ethical consumption in capitalism for a reason. We understand cars are a contributor to CO2 emissions and pollution, but we also understand it's not as simple as snapping our fingers and willing a better solution into existence by magic. We don't go up to workers driving to work berating them for using a car, and have never done so, for a reason.

It destroys jobs

Much like the environmental angle, the argument shouldn't be "destroy AI to preserve jobs" -- capitalism nurtures an underclass of unemployed workers regardless, and if we really wanted to preserve jobs then we are essentially arguing for going back to the age of subsistence farming, where you could die of starvation if your harvest was unfavorable, and you wore the same clothes until the threads literally dissolved, but at least you had a "job" (working the farm from sunrise to sunset). What I mean is, just having a job is not an end in itself. What we want is to be freed from work, and until then perform unalienated labor. This labor will necessarily have to benefit the proletariat if we want it to become automated to our benefit.

Interestingly, when electrification came to Sweden and industrial jobs were being cut due to the productivity gains, the demand of striking workers was not to undo electrification and rehire, but to argue for better pay. The argument was, if you're going to make me do the work of 10 people, then you can afford to pay me 10x more. This is a much stronger angle because it's universal to every worker: we work more and we take on more responsibilities, so our boss can clearly afford to pay us more. It speaks to everyone, except to the business owner who is being told "you won't actually make more money off our backs by doing this, not if we can help it". Case in point: illustrators didn't think their work would ever get automated (or if they did, they didn't think it would happen so soon), and when the time came they had nothing organized against it. But it's not unique to illustrators, it's just the latest example. Everybody thinks they're irreplaceable but capitalism's essence is that everyone, individually, is replaceable. The only thing that cannot be replaced is the proletariat as a class.

The effects of AI on the job market however are not so clear-cut. Many companies who were initially taken in by the promises of tech companies to replace their workforce with AI are now walking it back and rehiring, while others are opening up jobs in other areas to make use of AI-assisted human labor. At this time, it is too early to tell what permanent effect AI will have on jobs, and I don't put much faith in "predictions" that claim these or those 100 jobs will disappear by the year 2030 or whenever.

Regardless, we already have the solution to all of these problems: socialism. On ProleWiki, everything we do is in service to the reader. Our design and development philosophy is reader-first, and includes making the website more customizable and generally more able to answer your needs. We don't need you to spend several minutes trying to find the page you're looking for so that we can serve more ads (cough cough Google Search); we just take you to the page you're looking for. We don't serve ads, we don't ask for your money, and we don't need to maximize your time spent on our pages to please investors that don't exist. Our use of AI on ProleWiki is completely different from how it's used by capitalists. And yet, one cannot excise oneself from capitalism any more than forming a commune in the woods is Actually Existing Socialism: open-source models, as interesting as they are, often depend on proprietary models for their training. Capitalist companies themselves make heavy use of open-source code which they reuse for free, and then extinguish once they have milked it dry, becoming the sole provider of a service you now have to pay to use.

Yet, this shows that AI does have a place in socialism and communism. Maybe not a society-transforming place, but a place nonetheless. As Alibaba Cloud's founder Wang Jian said, AI changes the way they think about and approach problems; it's one more tool in their arsenal to solve problems with.[13] This supports the idea that AI is not the "fifth industrial revolution" or a "paradigm shift" like its hardest proponents believe, but it also shows that it has legitimate use cases and will continue to have them. It's not merely a "toy" or "slop" like the other side claims.

Capitalism destroys anything good before it even has a chance to start, but that is not new. In AI research there are very smart and skilled people who want to do good things, only to find their research misappropriated to squeeze just a little bit more profits out of this late-stage capitalism hellscape we live in.

AI and intellectual property

As previously mentioned, Chinese models are accelerating the death warrant for overvalued western tech companies. Project Stargate, the $500 billion AI infrastructure initiative launched by U.S. president Trump in January this year died before it even got off the ground when only two weeks after its announcement, DeepSeek came out and needed only 100 million dollars and spare GPU power to train a model that rivaled the top commercial Western models. Incidentally, DeepSeek is completely open-source -- as are most Chinese models, though not all -- and they contribute a lot to open software development. This has repercussions not just in AI but in all software, because software uses and builds upon what already exists, and this will include AI software too.

On the necessary baby steps of new tech

Let's talk about training AI. It is often remarked (and not wrongfully) that we wanted AI to do our chores so we could focus on our art, but it turned out AI is doing our art so we can do our chores. So I would ask a question: how is AI supposed to do our laundry if it can't recognize a laundry basket and use your laundry machine? How will the dirty laundry find itself in the washing machine, and the program started?

To achieve tasks like these, we need to train AI to have vision (a way to understand what an image depicts, either a photograph or a frame from a video feed), and this vision must be trained - just like ours. We didn't come into this world knowing what a laundry basket was, someone had to teach us. We do the same with AI. We teach it to recognize a laundry basket and dirty laundry from clean, and this requires that it has access to enough training data on laundry baskets, but also forks, knives, cats, basically everything that exists in this world that we take for granted.

Will we ever have AI do our chores in capitalism? Not if it's not profitable (which is looking increasingly likely as the bust phase approaches). But what we wanted as consumers was magical AI, the kind that we saw in movies of the 2000s (I, Robot, The Iron Giant, Wall-E and so on) that somehow works, but not on any scientific i.e. real world principles. How does Wall-E navigate his environment? Who knows! But now that we have something that may get us there -- neural networks, which might still need a bit of research to improve their foundation -- we don't want it anymore. That's not because we don't like innovation, but because we don't trust capitalism to deliver on the promises.

Meanwhile, Chinese company Unitree is releasing humanoid robots priced between 10 and 70 thousand dollars (!!) which you can get delivered to your doorstep today. Right now the industry is in its very early stage, and these robots come only with an SDK, that is, they have no functions or code within them but only the capability to run what you program (and you can easily use AI to program this robot for you, too). If you want your Unitree bot to go get something from the fridge for example, you will have to figure that out yourself from scratch -- there is no "get.beer.fridge()" function built into it. These are more like skeletons, from an industry that is only just getting its start.

But it's very promising if only because the best Western tech can offer is a clickety-clack piece of plastic that will start at $200,000 and hasn't left the lab yet, namely Tesla's Optimus.[14] We certainly are cheering for China to win this tech race, but that's not how China sees it. They're building humanoid robots for the benefit of the people, and to want to stop building robots in the West because we don't like them means only China will have them and means we will only be sabotaging ourselves. Revolutionary defeatism means working for the defeat of one's government. By all means we should work to stop our governments from using AI in warfare and surveillance, but this is not AI as a whole.

Research often fully pays off only years later. Maybe we'll find out humanoid robots are not that good at doing chores and we'll end up making a flatbed robot that carries your laundry basket to an Internet-connected washing machine that automatically turns on through the Wifi because that'll be more efficient and cost-effective (although that robot will have to know how to navigate doors and stairs), but it has to start somewhere is my point.

It's not uncommon for new marxists to ask how chores will be socialized in communism (in their process of understanding for themselves how labor can be organized differently): will we have people whose job it is to do chores, or will we still have our individual laundry machines and vacuum cleaners? And what about at the office, will workers be responsible for vacuuming and emptying the trash in their own workplaces?

Well, wonder no more. We will have robots that take our dirty laundry off our hands and handle it for us. Again, this isn't science-fiction. This man in the United States bought a 70,000 USD Unitree robot and is programming it to do things around the house as we speak: https://www.youtube.com/watch?v=pPTo62O__CU&list=PLQVvvaa0QuDdNJ7QbjYeDaQd6g5vfR8km. And as for industrial settings, China has built its first "dark" factories, factories that are 100% automated and therefore don't need to keep the lights on -- and can also work 24/7.[15] These are not entirely new manufacturing robots and they don't need vision capabilities, but they make heavy use of AI within these spaces to keep up with production and not have to send human techs to do maintenance. By the way, these factories are building the robots that will be used in more dark factories - it's getting recursive.

On the nuclear exit in Europe

The second thing the discourse generated by AI robots reminds me of is the nuclear issue, which is another hot topic in Europe. Europe has basically decided to abandon nuclear without having anything to replace it with -- once again, we are rejecting an existing solution, wanting to immediately replace it with something that does not exist yet. Germany, once a big producer of nuclear energy, has reopened coal plants to satisfy demand. There is now a huge energy crisis looming in Europe. Most people's argument against nuclear is that it's not safe enough, but no energy source is - in fact, nuclear is actually the safest energy source when comparing yearly deaths (coal doesn't come out of thin air!). Another reason nuclear is not "safe" is that we stopped doing research in nuclear tech in the 70s, and our plants in Europe are from that era. But with more research, much of which is done in Iran, Russia and China, we see that we can make nuclear energy more efficient and safer.

There will come a point where we will be able to recycle 99.9999% of waste, or perhaps even open fusion plants which make 0 waste (China is already doing lab tests on fusion reactors and yes, they work). Modern reactors are essentially already meltdown-proof, and the Fukushima disaster happened because it was built right next to the sea in a country known for earthquakes and tsunamis, because it was cheaper than pumping the seawater inland. The reactor chamber worked as intended and was preventing a meltdown, but the tsunami flooded the chamber and interfered with the system. The water thus superheated, making the reactor unable to dissipate heat, and we know how the rest played out.

Profits above people. If the plant had been built further inland on a hill far from tsunamis, no disaster would have ever happened. The problem wasn't nuclear but capitalist policies.

Nuclear is one example, and AI is another. With more research into AI, who knows what will come out of it? And not only as innovations, but as future inventions. In the same way we needed the radio to invent the TV, we will need neural networks to exist to invent something else, something that I obviously can't predict.

But this is exactly what intellectual property laws don't want to happen. IP holders don't want to make safe nuclear reactors or AI that does your chores, they want to make money.

On the IP lawsuits and our place in relation to them

What prompted me to write this essay is that I see many people in anti-AI circles cheer when big names like George R.R. Martin, Studio Ghibli, Shueisha (publisher of Shonen Jump magazine in which many famous series like One Piece, Naruto or Dragon Ball originated) or even Disney sue AI companies over perceived copyright infringement. Disney, the company that made its success on adapting old folk stories and then copyrighting them.

And this isn't just the liberals and the apolitical kind cheering for the strong arm of the law; many self-proclaimed communists also rally behind these lawsuits that they hope will take down AI, even accepting some adverse effects in the process if it means the end of AI -- which, as we have seen, due to the prevalence of open-source AI is simply a pipe dream. Even if these IP companies won their lawsuits, the models on people's machines would still exist and be usable. It's like trying to remotely delete a film you have downloaded to your hard drive.

In just 3 short years, we have gone from "abolish private property" to "actually maybe some copyright is good". But copyright law was never made to protect the small artist against unauthorized use of their work. That is not its purpose. In every epoch, the ruling ideas are the ideas of the ruling class - in other words, bourgeois law protects the bourgeoisie, from the first copyright laws all the way down to the quagmire we are in today, where Disney has extensively lobbied to extend copyright terms (in the US, to 70 years after the author's death, or 95 years after publication for corporate works) just so they could keep making money off Mickey Mouse. In fact, Steamboat Willie, the 1928 short starring an early Mickey Mouse, finally entered the public domain on January 1st, 2024 - almost one hundred years after its creation. What money there was to be made on a 100-year-old animated short is anyone's guess but if they own it, they're not gonna give it away for free. And you can thank Disney for all of this.

It reminds me of the old commercial, "You wouldn't steal a car",[16] the anti-piracy ad run by the Motion Picture Association that tried to associate piracy with some sort of moral failing or crime on par with grand theft auto. It was instantly retconned by the masses as "you wouldn't download a car" when the ad came out in 2004, because stealing is stealing and downloading is downloading (downloading creates a copy, stealing removes the original). We went from "I totally would download a car if I could" to "no sir I wouldn't because it might be used to train AI" -- and this is in fact an argument these lobbies are using right now to get you to not pirate the proverbial car! We are sabotaging ourselves just so we don't have to hand anything over to the AI boogeyman. But as communists we are currently not in a position of power, we can't afford to be picky because of some moral principles; we must be, well, Marxists. I know there are a lot of coincidences in this essay, but it's interesting to note that the MPA, RIAA and other big-business lobbies were the ones who associated the act of copying with stealing in the first place, and today a lot of artists bemoan that their art is 'stolen' to train AI, repeating RIAA verbiage.

When OpenAI introduced the Ghibli style to its image model DALL-E, people felt it as a direct attack on themselves because they identify so much with the Ghibli style, despite the fact that the Ghibli style neither belongs to the people (legally) nor was invented by the people. It was invented by a company, the joint-stock Studio Ghibli company (incorporated), of which Hayao Miyazaki is the co-founder - therefore he of course has an interest in protecting what he considers to be Ghibli's style, so he can become its sole provider. The art style was cultivated to be commercially viable and sell merchandise. Case in point, Ghibli sold officially-licensed Grave of the Fireflies tin cans of hard candy in the 1980s as a tie-in to a movie about war orphans surviving the firebombing of Kobe.[17] It's also often pointed out when this topic comes up that Miyazaki is against AI, as if he were a thought leader on art or AI or had the same class interests we do. He's a business owner and thinks about business first, because no matter how well-intentioned he might be, no business means no way to tell the stories he wants to tell. Furthermore, it should be pointed out that the famous video of him berating a group of developers for showing him "AI" dates back to 2015 and was showcasing procedural generation, not AI as we understand it today (LLMs or image generation).

One can like Ghibli vibes if they want (which is not limited to just the illustrative work but the entire movie package, with the music, animation etc) but the point is that it's an aesthetic born under capitalism and subject to its contradictions. Ghibli movies are here to sell you a product and from this angle, OpenAI is not so different because OpenAI also wants to sell you a product, and they will emulate popular art styles to help them do it. In addition, Ghibli and other companies have very obvious interests in elevating their art styles to something that seemingly "can't" be copied even by the most hardcore fans. It makes Ghibli the sole provider of such a style, which people like for its cozy vibes and warmth. Basically, if you want to feel cozy, put on a Ghibli movie - nothing else comes close. It ties into a point the essay Artisanal Intelligence makes (paraphrased): that before AI, artists claimed what they did was not so special and anyone could be creative. After AI, some suddenly switched to making creativity something only a select few are capable of. Artisanal Intelligence can be considered the foundation to this essay in fact and I highly recommend you read it.

Studio Ghibli is suing OpenAI on copyright infringement grounds. Miyazaki is often thought of as a progressive, in that his movies often talk about war, climate change and other social issues, and yet we see that he is not above using copyright law when it suits him. In fact, as if this whole saga wasn't complex enough already, when OpenAI made a huge deal about their image model being able to do Ghibli style, they were intentionally banking on the angle that they were somehow champions of the people for making art available to the masses -- Sam Altman sported a Ghibli-style AI profile picture of himself on Twitter for the longest time -- when in reality OpenAI is very protective of its own IP, including their AI models. More and more, OpenAI is becoming a pariah in the open-source AI community, which likes to run models locally, precisely because we can't run their models locally. OpenAI was also founded to make open-source AI (hence its name) but quickly changed course in 2016 to become a for-profit company.

And while you might agree with Ghibli's lawsuits against OpenAI, the proletarian distinction is understanding that the legal argument they make can also be used to repress the workers and not just other companies. In a dictatorship of the bourgeoisie (DotB), bourgeois infighting has consequences for the workers.

How AI models work, briefly

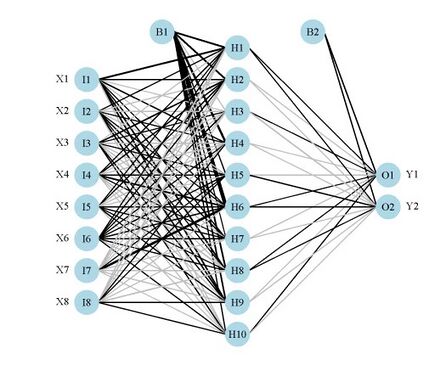

Regarding the results of these lawsuits, the first thing we see is that the courts often rule in favor of the AI company. This is because the companies bringing these lawsuits, most of which are in the entertainment industry, argue on the training of the model. Currently, training takes place by giving a model trillions of images/texts to work on, so that it can learn to recognize semantic patterns. In an LLM, the model starts to learn which words tend to follow each other, and which words are semantically associated to others. For example, Apple might be mathematically close-ish to "fruit", or "tree", but also to "Google" - and this is one way an LLM knows if you are talking about Apple the company, or apple the fruit. But this isn't simply a Markov chain or pattern-matching like any program can already do. Through this process, the AI creates a neural network of its own, often referred to as a 'black box'. This network only speaks to the model: it learns to create its own neurons through the training process and connects them in a way that makes sense to it. This is the true innovation of machine learning and neural networks. Here is an example of what a very simple neural network might look like:

Deepseek-r1 is also named "Deepseek:671B", with the B indicating how many parameters (the weighted connections between its neurons) it has: 671 billion in this case, whereas the network in the image above only has 22 neurons. When recalling information, LLMs don't simply copy and paste from their training data -- an argument that was propagated early on but has now thankfully been abandoned, because it was always a misconception that had no business ever being spread. When you send Deepseek a prompt, the data transits through its neural network, with each connection carrying a statistical weight (represented as a number that looks like 0.00392130854... with 16 places), to create an output token, and then another, and another. How exactly your prompt travels through the neural network, which neurons the model uses, and how these neurons are connected is for the LLM to decide and the data wouldn't make sense to a human observer either way -- and this is actually problematic to researchers as they can't properly observe what goes on inside the black box. But it's not magic, it's math.
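To make "it's math" a little more concrete, here is a deliberately tiny sketch in Python. Everything in it is an assumption made for illustration: the five-word vocabulary, the layer sizes and the random weights are mine, and a real LLM uses a far larger and different architecture that looks at the whole prompt rather than just the last word. What it does show is the loop described above: numbers (weights) turn an input token into probabilities for the next token, one token is drawn, and the process repeats.

```python
import math
import random

random.seed(0)  # fixed seed so the toy run is repeatable

VOCAB = ["apple", "fruit", "tree", "google", "the"]  # toy vocabulary (assumption)
EMBED_DIM = 4   # size of each word's vector (assumption)
HIDDEN = 3      # number of hidden neurons (assumption)


def random_matrix(rows, cols):
    """A rows x cols table of weights. Random here; training would set them."""
    return [[random.uniform(-1, 1) for _ in range(cols)] for _ in range(rows)]


# These three tables ARE the model: no training text is stored anywhere.
embeddings = random_matrix(len(VOCAB), EMBED_DIM)
w_hidden = random_matrix(EMBED_DIM, HIDDEN)
w_out = random_matrix(HIDDEN, len(VOCAB))


def matvec(vec, matrix):
    """Multiply a vector of length n by an n x m weight table."""
    return [sum(v * row[j] for v, row in zip(vec, matrix))
            for j in range(len(matrix[0]))]


def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def next_token_probs(token):
    """Send one token through the network, get a probability per vocabulary word."""
    x = embeddings[VOCAB.index(token)]
    hidden = [math.tanh(v) for v in matvec(x, w_hidden)]
    return softmax(matvec(hidden, w_out))


def generate(start, n_tokens):
    """Produce one token, then another, and another."""
    out = [start]
    for _ in range(n_tokens):
        probs = next_token_probs(out[-1])
        out.append(random.choices(VOCAB, weights=probs, k=1)[0])
    return out


# Count the weights; this is what the "B" in a model name refers to.
n_params = sum(len(m) * len(m[0]) for m in (embeddings, w_hidden, w_out))
print(n_params, "weights")
print(generate("apple", 5))
```

With random weights the output is gibberish, which is the point: everything a model "knows" lives in the values of those numbers, and this toy has 47 of them where Deepseek has 671 billion.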

Image generation works similarly, and if you're interested I recommend this video from Computerphile narrated by Dr Mike Pound who is, incidentally, very excited about Deepseek and other open-source Chinese models because they allow him to do a lot of research in his field, on which he publishes academic papers. The short of it is that AI images are created from random Gaussian noise, the same white noise that used to be on cathode TVs when you tuned to an unassigned channel. The model that creates the image is also a neural network. Early on when commercial image models appeared, a common argument I saw against them was that they "spliced together" thousands of images to create an output, but this is not true and would imply that the neural network stores the source images somewhere in its file. They actually learn to undo the Gaussian noise on a picture to which it was applied by creating their own neural network. Thus when making an image from scratch, a model starts with what was only ever random noise. Therefore, AI does not copy existing material: the training data is not even located within the model, only the resulting neural network (called the weights) is.
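The "learning to undo noise" idea can be sketched in a few lines of Python. This is a simplified illustration, not how any particular product is implemented: it assumes the standard denoising-diffusion formula for mixing a picture with Gaussian noise, uses a five-pixel list as the "picture", and cheats by handing the denoising step the exact noise that was added, whereas a trained network would have to predict that noise from the noisy picture alone. The function names and the `alpha_bar` value are mine.

```python
import math
import random

random.seed(0)  # fixed seed so the toy run is repeatable


def add_noise(image, alpha_bar):
    """Training side: blend a picture with Gaussian noise.

    alpha_bar close to 1 keeps mostly picture, close to 0 leaves mostly noise.
    """
    noise = [random.gauss(0.0, 1.0) for _ in image]
    noisy = [math.sqrt(alpha_bar) * pixel + math.sqrt(1 - alpha_bar) * n
             for pixel, n in zip(image, noise)]
    return noisy, noise


def remove_noise(noisy, predicted_noise, alpha_bar):
    """Invert the blend above, given a guess of the noise that was added."""
    return [(x - math.sqrt(1 - alpha_bar) * n) / math.sqrt(alpha_bar)
            for x, n in zip(noisy, predicted_noise)]


image = [0.0, 0.25, 0.5, 0.75, 1.0]  # a five-pixel "picture" (assumption)
alpha_bar = 0.3                      # mostly noise (assumption)

noisy, true_noise = add_noise(image, alpha_bar)

# A trained network would predict the noise from `noisy` alone.
# Here we pass the true noise to show that a perfect prediction
# recovers the picture exactly.
restored = remove_noise(noisy, true_noise, alpha_bar)

print([round(p, 2) for p in noisy])
print([round(p, 2) for p in restored])
```

During training the network is only ever graded on how well it guesses the noise, and what it keeps afterwards is its weights. At generation time there is no source picture at all: the process starts from pure noise and applies the learned denoising step repeatedly.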

So far, the courts were not convinced by IP holders' argument that training AI constitutes copyright infringement in itself precisely because the training data is not contained within the model. Rather, the courts believe it falls under fair use - and I think that's a very important point to note.

For one, I made sure to use the word neural network in the paragraphs above because you are very intimate with one of those: your own brain. The human brain works on a network of neurons, i.e. it's a neural network. AI may "only" be an approximation of the human neural network (much like a camera is only an approximation of our own eyes but I digress), but let me ask this open question: if IP companies successfully stop AI training in a court of law and set a precedent, what stops them from coming after you? After all, in the process of learning to draw your own Ghibli scene on Krita, you had to study the Ghibli style, activating your neurons and "training" yourself to then draw that style. What stops them from making the claim that their style now is the IP, and not just the characters or the script, because models are able to replicate their style? Obviously they wouldn't come for artists immediately with the argument that "you worked just like an AI", but their argument would be to push for legislation that style can be copyrighted and trademarked.

On "copyright infringement"

We know full well that IP companies want to be your sole provider for their IP, and not just in the entertainment industry. Adobe wants to be the only company that does photo editing. Microsoft wants to be the only company that makes Operating Systems. It's in the DNA of any capitalist enterprise to create a monopoly. This is why they create IP in the first place: they wouldn't make their product (under a capitalist context) if there was no way to make money from it. If Shonen Jump wins its lawsuit against OpenAI, what would stop them from coming after you for drawing One Piece fan art, how would you prove it's not AI, how would you find the money to defend yourself in court? I'm specifically addressing the artists reading this here.

And yes, this has happened before AI was even a thing. People have found themselves having to pay thousands of dollars for "copyright infringement" when all they did was use a picture they found on Google, or create something that looks a little bit too much like a trademarked design. But this means we need to tear down copyright law, not reinforce it with precedent (or even lobbying, as we'll see just a few paragraphs below).

Nintendo is a well-known litigator over IP, to the point that they believe they own the whole monster-taming genre because they own Pokémon - but they didn't even create the genre, Shin Megami Tensei did. They have brought emulator developers to court and won, even forcing a man to pay back the millions Nintendo "feels" they lost to him hacking the Switch for others - a sum he will be paying off for the rest of his life, even as Nintendo was made aware he would never be able to pay everything back before he dies. They said "we don't care, we still want him to pay every cent". He is effectively Nintendo's slave. His name is Gary Bowser.[18] In many cases, these emulators are the only way to play old video games that publishers are sitting on and refuse to make available, simply because they don't believe it's a worthwhile investment on their part. But they won't release them into the public domain for fans to make them legally playable, because what if they find a way to make some money off this game in the future?

So we see that IP law is anti-consumer, because it prevents consumers from using the properties they know and love in a way that speaks to them. We see that IP law serves as a gatekeeper and not as a protector. Copyright doesn't encourage people to create (knowing that they'll be able to make money off of it), it does the exact opposite. And piracy plays a role in preserving lost media and making it accessible to the people.

I feel there's been an entire reversal of copyright and intellectual property, where suddenly self-proclaimed communists side with big companies just to stick it to AI. It feels completely backwards. It's not just about making One Piece fan art on DeviantArt that only 30 people will ever see, it's about making all IP free to use and remix. This is why we have Creative Commons licences. This is why we have fair use. Fair use has been used for decades by small creators of all kinds (including software developers and researchers) to create things, as it's the only tool we have to try and curtail the behemoth that is copyright law. Kneejerk reactions to anything with an "AI" label have come to the point where a self-proclaimed communist with a Deadpool profile picture verbatim told me he "didn't care about throwing fanfic writers under the bus if it stops AI". Not only is Deadpool intellectual property, the comic itself borrows heavily from fanfic culture -- without attribution or compensation, of course.

And yet IP companies already make heavy use of open-source and even copyrighted work, including exploiting fan artwork without compensation or any care for "the law" -- for example the clothing brand Zara has been repeatedly found to be appropriating designs from artists to put on their t-shirts without compensation. What happens when this is found out is they say "oopsie", remove the design from their lineup (which they were going to do anyway), and then move on. The artist who made the original design doesn't have the resources to sue Zara, and so they keep getting away with it.

Microsoft is notorious for relying on open-source code: they even pioneered the embrace, extend, extinguish policy to turn open-source contributions made with free labor into their own IP.[19] They like open source and fan art when it suits them, and want to control it when it doesn't, because capitalism. And they know they can get away with it because they have money for lawsuits and you don't, and AI or not it doesn't change the fact that Nintendo can and will and has bankrupted people with a lawsuit if they want to drag it on, all over one instance of perceived infringement. Perceived infringement is incredible, because it allows them to say "well gosh we feel like we lost millions, yknow?" when they actually lost nothing - again because piracy makes a copy, it doesn't remove the original. It's the same argument the Motion Picture Association makes about movie piracy, they feel it makes them lose money because they count every instance of movie piracy as a lost ticket/DVD sale when in actuality, pirates would never have paid for the movie in the first place. These are arguments that were settled all the way back in the 2000s.

It is also notable that when ICE published a horrifying video pairing footage of agents abducting people from the streets with the Pokémon theme song, Gotta catch 'em all, Nintendo used the DMCA mechanism to take the video down but did not sue the Department of Homeland Security;[20] they only call the courts for people who can't afford lawyers (i.e. me and you) because these are open and shut cases. The DMCA mechanism is a US invention that does not exist outside of it and while it purportedly allows every creative to protect their IP with a simple form, in practice we see that it's mostly used by big companies because they're the only ones with the means to catch every instance of copyright infringement. The DMCA is not really legally binding: if you ignore it the copyright owner still has to sue you, and the notice basically just asks you to take something down. So if you didn't have the means to sue someone for copyright infringement, serving a DMCA won't suddenly change that. But when it's Nintendo serving a DMCA, you know they have the means to drag you to court so you comply.

What proponents of these lawsuits will have to explain is: beyond "destroying AI", what exactly do they think these lawsuits achieve that's beneficial to them? Not even to the proletariat as a class, but to them specifically. What do you gain from blocking a Mario fangame on itch.io or closing down emuparadise while AI continues to be developed and new models are released hourly?

Shueisha has no leg to stand on in its lawsuit against Sora (the video generation tool recently released by OpenAI) because a- the training process has already been litigated and found to be fair use and b- the output is not a copy of a One Piece episode, it wasn't lifted off a DVD or anything. Therefore it's transformative, an integral part of fair use.

Their lawsuit will go nowhere, but it's also very important to note that this is going to be a verdict afforded to OpenAI as a big company, which is not afforded to common people. The fact that One Piece features in the training data is indisputable (but we shouldn't care either way where One Piece material ends up); the problem is only in how these IP companies present it. Yet when OpenAI pirates One Piece or uses Anna's Archive to download terabytes of books, they are excused as fair use. When we do so, we are litigated against by another IP company and forced to pay back millions for "perceived" damage.

Again, IP is not your friend. It's not there to protect you. Anna's Archive is now being deplatformed while OpenAI has not said a word about it, despite knowing full well they got a lot of their training data from that website.

On corporate lobbying and indie artists

Speaking of copying, it's interesting that many people have gone back to the outdated "stealing" phraseology that we used to hear from RIAA anti-piracy ads -- the RIAA is the Recording Industry Association of America. But piracy is not stealing, and neither is training AI with a dataset; it's copying. There's a very clear difference between the two, and this was settled over two decades ago already. Yet some artists are now bringing the "stealing" wordage back, when they spent the entirety of 2021 telling NFT owners that they could just "right click -> save" their expensive ape drawing.

At this moment the RIAA is trying to enlist the help of indie artists to lobby against AI, when piracy is historically often what made small artists famous, and also gives them independence from controlling labels and even streaming services, as streaming services can ban you and your music for any reason without recourse. If you're not familiar with the RIAA, they basically represent the big three record labels: Sony, Universal, and Warner. If the RIAA gets its way with its AI lawsuits, it will force indie artists into an even more precarious position than they already are. Yet this hasn't stopped the RIAA from enlisting A2IM (The American Association of Independent Music) or MWA (the Music Workers Alliance) in recent legal actions against "unauthorized use of copyrighted music in AI training models". These two groups may not represent all artists, but a lot of "independent" record labels are members, and they happily joined the RIAA in these lawsuits.

Does AI music threaten your livelihood as a small artist? No more than your livelihood is already precarious because of lobbies like the RIAA, whose labels control your revenue share, distribution and market access without giving you any leverage or negotiating power. What the RIAA is afraid of is not AI but losing control over music, including your music. They are trying to retain their monopoly and limit your negotiating power. They claim to protect you against AI music so they can keep exploiting you. It's the same reason they fought against piracy back in the day, and digital distribution before that. If they can't profit from it, then they don't want it to exist for anyone. The RIAA was even against mp3 players early on.[21]

And if you were not aware AI is capable of making music, I highly recommend you sign up on Suno[22] with a free account and play around with the tool, if only to see what AI is capable of right now, even if you hate AI. Know your enemy and all that.

If the RIAA were to win against AI, they would win leverage over how music is produced and distributed. Not only would they remove a potential competitor to their lobby, it would also reinforce their power to decide who can enter the music market, on their terms. They will make model developers pay for the music they train their models on, sure, but none of that money will ever make its way to you. They will sell packages containing thousands of songs for a lump sum, making a bit more money on it, but each sale will amount to only 1 cent going to musicians. In fact, judging from historical examples, it's very likely that if the RIAA can't destroy AI music generation entirely, they will suddenly flip: adopt it and try to control it so that you can only make or get AI music with their permission. And regardless of your thoughts on AI music, this shouldn't be desirable to anyone who actually cares about music, period.

When the RIAA flips to adopting AI, their three big clients (Sony, Universal and Warner) will add in their contracts that they can use your music to train AI. After all, it already belongs to them when you sign with a label, and you will be no more protected as an artist than you are now. Either way, they win because they already own the monopoly on music. So instead of having Suno or other models to make music with, you will suddenly learn that you've apparently recorded an entire album for Sony - they will have access to AI generation, and it'll be legal because copyright. It doesn't belong to you.

(Update January 2026: Late last year, shortly after writing this essay, Suno was taken to court by Warner Music for copyright infringement. As part of the settlement, Suno agreed to partner up with Warner and has announced major changes to its service starting in 2026. Among them: models will be trained solely on Warner content -- presumably with no compensation to the artists that Warner claimed to fight for -- and music downloads will now require purchase. So the service gets worse, Warner becomes a little bit bigger, and artists are still getting the short end of the stick, just as I predicted when writing the essay. For now artists have to 'opt in' to being in the training data, but I expect Warner will start making this a default clause in new contracts.)

Likewise, if Shueisha or others manage to attack the transformative aspect of fair use, then they can reinforce copyright and IP law in their favor by setting a precedent. And you're still not going to be protected if you are a creative, because you don't have the money to scour the web to find copyright infringement and litigate it in court. Even if lobbies such as the RIAA say they will protect you with their lobbying, they won't. You're on your own. But Shueisha will be very happy to come after you for drawing One Piece fan art for a commission (and then probably plagiarize it for their own promo art). They will be very happy to force you to pay what little money you make for the rest of your life to pay not actual damage, but perceived damage to their profits.

On patents and DRM

IP is more than just One Piece and A Song of Ice and Fire. IP is also software, medication and medical devices, patents of all kinds, or even shapes (like the Nike swoosh). These all form intellectual property. And yes, unironically, chatGPT models are also intellectual property: they belong to OpenAI, who holds them as closely guarded trade secrets and would sue if someone were to publish even their outdated o3 model on Hugging Face.

Patents are even given out for game and software mechanics, and patents can be bought and sold. This is how patent trolls come to exist: companies whose only business model is to buy up all sorts of patents and then sue anyone they feel is using the patent without authorization. If you remember "arrows guiding the player to the objective" in video games from the 2010s and wonder why they suddenly disappeared, patent law is why: a patent was filed for it, and so games either had to pay outrageous prices to the owner or find another mechanic that's not patented. Patents actively make things worse and gatekeep creativity instead of promoting it, and they are part of IP law.

And it's not just video games; examples abound in open software. For a long time Microsoft did not allow copyleft/open-source software on its Microsoft Store. Windows only supports Chromium and Gecko browser engines, by choice (used in Chrome and Firefox respectively). Speaking of Microsoft/Windows, Bill Gates' mother sat on the United Way board alongside IBM's chairman and reportedly put in a good word for her son's company when IBM was looking for an operating system. Except Microsoft didn't have an operating system yet. So they bought QDOS, a clone of Gary Kildall's CP/M, from Seattle Computer Products for around 50,000 dollars, renamed it, and licensed that to IBM. From the very start Microsoft has skirted the law around intellectual property, protecting it when it serves them and extinguishing it when it doesn't.

Amazon's Kindle format tries to control e-books, preventing you from loading onto your Kindle any e-book that isn't in their specific DRM format. And because the Kindle format is so prevalent, some e-books are only available in this format, and it's a pain to try and make them available in other file formats that can be read by non-Kindle e-readers.

Apple prevents you from installing apps from anywhere but their App Store. This isn't for security reasons; it's just a mercantile decision so that you have no choice but to give them your money even after you've bought their device, as if that wasn't enough.

And with their leverage, these companies can easily extinguish other alternatives: Dailymotion tried to be the competition to Youtube (although DM was proprietary too), and Google's response was to derank Dailymotion from their search engine and promote Youtube links instead. Google also ran ads for Youtube on its own platform and prevented Dailymotion from running theirs. With software patents they could prevent Dailymotion from adding user-centric features to their video player, and today you go on Youtube to watch videos and nowhere else. This is directly tied to intellectual property because Youtube, Kindle, iOS etc are classified as intellectual property, being software, and they legally belong to a company and not to the people.

If they could have their way, Microsoft would only have you use Windows OS with the Edge browser, Microsoft Office to write with and Bing as your search engine. In Europe, they had to be brought to court several times to allow competition on their OS.

On Chinese open-sourced AI

I feel that with the advent of commercial AI we now have to argue for a basic liberation of IP that the USSR had settled a hundred years ago already. I feel I now have to explain why communists should not want copyright to exist. Like I said earlier, Dr. Mike Pound is very excited for Chinese AI models that are open-source, because he can use them in research freely. He can fine-tune their training for his needs and create things. I don't know what research Dr. Pound is involved in currently, but I know that neural networks have already been used in the medical field to help analyze radiology imagery and detect diseases. In China, neural networks are being deployed right now for what is called 'precision farming': AI-supported irrigation and fertilization systems that deliver nutrients based on real-time soil data. This boosts soil health, fights against soil degradation, and works towards ensuring food security.

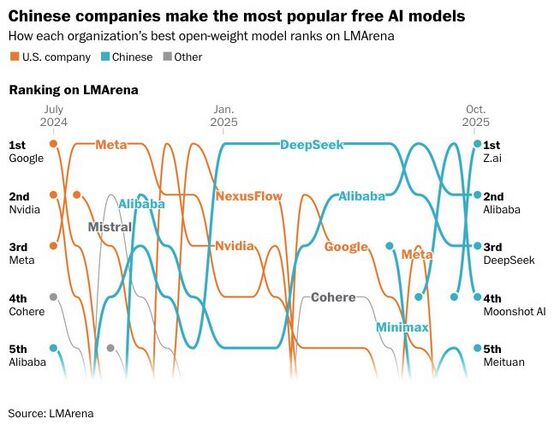

These models don't come out of nowhere: they rely on a whole host of prior open-source research and software packages that are freely accessible and usable. China, working under a socialist system completely different from the capitalist one we know, embraces open software and is now leading the AI race while the US follows. In fact, the five most popular open-source AI models are all Chinese. This is incidentally also a very strong argument for China in the 21st century: just go on the r/LocalLLaMA subreddit, and you will find plenty of non-communists gushing about China because they're the only ones offering an actual competitive alternative to western, proprietary models.[23]

I often make the argument that if you don't like AI, you should like China because they are exposing how overvalued the western AI industry is. And if you like AI, you should also like China because they are putting out a ton of research and promoting more research by releasing their models for free. Basically, we should be more like China.

(The top 5 downloaded open-source models on LMArena are all from China.)

When we ask "how come western AI companies only focus on making AI a chatbot friend, therapist or question answerer", we get our answer: unsurprisingly, it's capitalism. They're not interested in making a product for public good (i.e. the proletariat), they're interested in making money. This is why OpenAI is discussing allowing erotica on chatGPT,[24] trying to stop the bubble from popping by getting a few more $20 subscriptions, while China is actually using AI to solve societal-level problems.

AI in the Global South

It's no wonder that the global south is adopting AI, yet the anti-AI crowd mostly wants to focus on OpenAI. OpenAI is a major player at the forefront of AI innovation -- they pioneered the Chain-of-Thought that DeepSeek has made popular, but keep GPT's chain of thought hidden from the user, which is counterproductive for researchers -- but it is also only a tiny part of a much bigger ecosystem that transcends IP and borders. Focusing on it alone is missing the forest for the trees. Iran recently published academic guidelines surrounding the use of AI (as related to me by a fellow ProleWiki editor currently studying in Iran): they allow LLM use provided the student notes the model and time of usage, and can prove they understand the material. Cuba is working on making its own Large Language Model, even under the deadly embargo they face from the United States.